This competition aims to promote the advancement of Embodied AI technology for robots in virtual environments. Participants must integrate various technical components applicable to robots to perform specific tasks in a given virtual environment. These technologies will be developed and tested in simulation environments before being effectively utilized in real-world settings.

The competition challenges participants to develop and deploy embodied AI systems for robots in 'Meta-Sejong', a virtual environment developed by Sejong University.

The main task focuses on controlling an autonomous mobile robot equipped with a robotic arm to identify, classify, and collect various objects scattered throughout the environment.

The mission consists of two stages, and the output of each stage serves as the input to the next. Teams may choose the stages that match their capabilities; completing every stage is not required, so don't be discouraged. The two stages are as follows:

- Stage 1: Analyze information and video data from fixed cameras (CCTV) installed in the Meta-Sejong virtual environment to identify abandoned waste and calculate its location

- Stage 2: Plan an efficient movement path to collect the waste identified in Stage 1, send movement commands to the robot, and use the robotic arm to pick up each piece of waste and place it in the appropriate container for its type

This section describes in detail the tasks participants must perform in this competition. As explained in the introduction, the mission consists of two stages.

Mission Stages

Stage 1

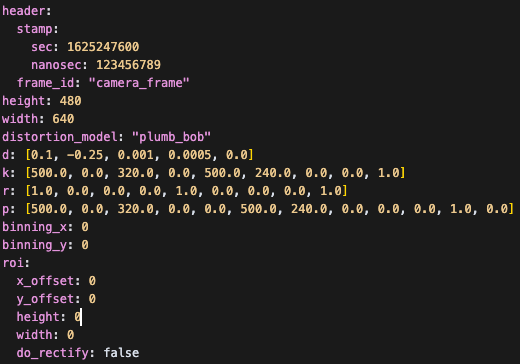

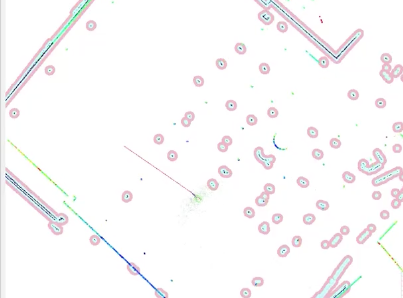

Video footage from the CCTV cameras installed in the virtual environment, together with information about those cameras, is provided as ROS2 messages. Participants must apply object detection to the camera footage to find abandoned waste in the environment. Each detected position in the image must then be converted into coordinates in the virtual environment by combining it with information such as the camera specifications and installation location. Stage 1 evaluates how accurately the location and type of the abandoned waste are inferred through image analysis.

Types of Waste to Analyze

Object Detection in Video

Camera Information

Identifying Waste Location through CCTV Footage
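Converting a detection into environment coordinates can be sketched with an ideal pinhole model: cast a ray through the pixel and intersect it with the floor. This is a minimal sketch that assumes an undistorted camera, a flat floor at height `ground_z`, a known camera-to-world rotation `r_wc`, and a known camera position; the function and parameter names are hypothetical. If the provided camera information follows the standard ROS 2 `sensor_msgs/msg/CameraInfo` layout (an assumption here), `fx = k[0]`, `cx = k[2]`, `fy = k[4]`, and `cy = k[5]` of its row-major `k` matrix.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def pixel_to_ground(
    u: float, v: float,
    fx: float, fy: float, cx: float, cy: float,
    r_wc: Sequence[Sequence[float]],
    cam_pos: Vec3,
    ground_z: float = 0.0,
) -> Optional[Vec3]:
    """Back-project pixel (u, v) onto the horizontal plane z = ground_z.

    Assumptions (hypothetical, not from the competition spec): no lens
    distortion, optical frame with x right / y down / z forward, and r_wc
    rotating camera-frame vectors into the world frame.
    """
    # Ray direction through the pixel, expressed in the camera optical frame.
    d_cam = ((u - cx) / fx, (v - cy) / fy, 1.0)
    # Rotate the ray into the world frame: d_world = r_wc @ d_cam.
    d_world = tuple(sum(r_wc[i][j] * d_cam[j] for j in range(3)) for i in range(3))
    if abs(d_world[2]) < 1e-9:
        return None  # ray is parallel to the ground plane
    # Solve cam_pos.z + s * d_world.z = ground_z for the ray parameter s.
    s = (ground_z - cam_pos[2]) / d_world[2]
    if s <= 0:
        return None  # the plane is behind the camera
    return tuple(cam_pos[i] + s * d_world[i] for i in range(3))
```

For a camera 3 m above the floor looking straight down, the principal point maps to the spot directly below the camera, and a pixel one focal length to the right maps 3 m along the world x axis. A real CCTV feed would likely also need distortion correction and a bounding-box reference point (for example, the bottom centre of the box) chosen by the team.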

Stage 2-1

Only participants who have successfully completed Stage 1 can participate in Stage 2, which uses the Stage 1 inference results. Based on the waste locations inferred in Stage 1, participants design a movement path that minimizes the total travel distance. The evaluation covers the process of collecting all objects through robot arm control within the time limit.
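Choosing the order in which to visit the waste locations is a travelling-salesman-style problem, so an exact optimum is expensive in general. A minimal sketch, assuming straight-line (Euclidean) distances between 2D waste positions and an open route that does not return to the start, is a nearest-neighbour construction followed by 2-opt improvement. This is a common heuristic swapped in for illustration, not the competition's prescribed method, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def path_length(start: Point, order: Sequence[int], pts: Sequence[Point]) -> float:
    """Straight-line length of start -> pts[order[0]] -> ... (no return leg)."""
    total, cur = 0.0, start
    for i in order:
        total += math.dist(cur, pts[i])
        cur = pts[i]
    return total


def plan_visit_order(start: Point, pts: Sequence[Point]) -> List[int]:
    """Heuristic visit order: nearest-neighbour tour refined by 2-opt."""
    # Greedy construction: always drive to the closest unvisited waste item.
    remaining = set(range(len(pts)))
    order: List[int] = []
    cur = start
    while remaining:
        nxt = min(remaining, key=lambda i: math.dist(cur, pts[i]))
        order.append(nxt)
        remaining.remove(nxt)
        cur = pts[nxt]
    # 2-opt: reverse any segment whose reversal shortens the open path,
    # repeating until no reversal helps (a local optimum, not a global one).
    improved = True
    while improved:
        improved = False
        for i in range(len(order) - 1):
            for j in range(i + 1, len(order)):
                candidate = order[:i] + order[i:j + 1][::-1] + order[j + 1:]
                if path_length(start, candidate, pts) < path_length(start, order, pts) - 1e-9:
                    order = candidate
                    improved = True
    return order
```

Straight-line distance ignores walls and furniture; replacing `math.dist` with path lengths computed on the occupancy map would give a more faithful cost, at the price of more planning queries.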

Occupancy Map for Path Planning

Navigation Example using SLAM
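Moving between waste locations on an occupancy map is commonly done with a grid search such as A*. The sketch below assumes the map has already been thresholded into a grid where `0` means free and any other value means blocked, and that world positions have been converted to `(row, col)` cells. Those preprocessing steps are assumptions: a ROS 2 `nav_msgs/msg/OccupancyGrid` stores row-major `data` values of `-1` (unknown) or `0` to `100` (occupancy probability), with the cell size and map origin in `info.resolution` and `info.origin`. The `astar` function is a hypothetical helper, not part of any competition API.

```python
import heapq
from typing import Dict, List, Optional, Sequence, Tuple

Cell = Tuple[int, int]  # (row, col)


def astar(grid: Sequence[Sequence[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """4-connected A* on a grid where 0 = free and any other value = blocked."""
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        # Manhattan distance is admissible for unit-cost 4-connected moves.
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries: (f = g + h, g, cell)
    came_from: Dict[Cell, Cell] = {}
    g_cost: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, g, cur = heapq.heappop(open_heap)
        if cur == goal:
            # Walk parent links back to the start, then reverse.
            path = [cur]
            while cur in came_from:
                cur = came_from[cur]
                path.append(cur)
            return path[::-1]
        if g > g_cost[cur]:
            continue  # stale entry superseded by a cheaper route
        r, c = cur
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cur
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None  # goal unreachable
```

In practice a navigation stack would also inflate obstacles by the robot's footprint before searching, so that the returned cell path keeps the mobile base clear of walls.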

Stage 2-2

Only participants who have successfully completed Stage 1 and Stage 2-1 can perform the Stage 2-2 mission, which is carried out while moving along the path designed in Stage 2-1. On arriving at each node of that path, the robot must use its onboard camera and robotic arm to pick up the waste and sort it by type. The evaluation counts how many pieces of waste are successfully collected. Note that longer collection times may result in point deductions.
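The per-node collection loop can be sketched as follows. This is a minimal sketch under stated assumptions: `navigate_to`, `detect_waste`, `pick`, and `place_in` are hypothetical callables standing in for a team's own ROS 2 navigation, perception, and manipulation code, and the waste-type-to-container mapping `bin_for_type` is supplied by the caller because the actual waste types and containers are defined by the organizers. The loop stops once the time budget is spent, reflecting the time limit and the deductions for long collection times.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class CollectionReport:
    collected: List[str] = field(default_factory=list)  # waste types placed in a container
    skipped: List[Point] = field(default_factory=list)  # nodes where navigation, detection, or pick/place failed
    elapsed_s: float = 0.0


def run_collection(
    route: Sequence[Point],
    bin_for_type: Dict[str, str],
    navigate_to: Callable[[Point], bool],      # hypothetical: drive to node, True on arrival
    detect_waste: Callable[[], Optional[str]],  # hypothetical: waste type seen by the arm camera
    pick: Callable[[], bool],                   # hypothetical: grasp the detected item
    place_in: Callable[[str], bool],            # hypothetical: drop the item into a container
    time_limit_s: float,
    clock: Callable[[], float] = time.monotonic,
) -> CollectionReport:
    report = CollectionReport()
    t0 = clock()
    for node in route:
        if clock() - t0 > time_limit_s:
            break  # time budget spent; remaining nodes are not attempted
        waste_type = detect_waste() if navigate_to(node) else None
        target_bin = bin_for_type.get(waste_type) if waste_type else None
        # Short-circuiting: only pick if a container is known, only place if the pick succeeded.
        if target_bin and pick() and place_in(target_bin):
            report.collected.append(waste_type)
        else:
            report.skipped.append(node)
    report.elapsed_s = clock() - t0
    return report
```

Injecting the clock and the robot actions as parameters keeps the mission logic testable with stubs before connecting it to the simulator.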

Robot Movement Video

Pick&Place Video with Robotic Arm

All participants must submit a demo paper of up to two pages (a two-page abstract for the 'Student Challenge Track') in the IEEE conference format. The paper must describe the system design, technical approach, key challenges, and results obtained in the Meta-Sejong virtual environment. Detailed guidelines are available in the IEEE submission instructions. Because the review is not double-blind, the submission should include all authors' names, affiliations, and email addresses. At least one author must attend MetaCom 2025 in person to present the work during the challenge session. Accepted papers will be included in the IEEE MetaCom 2025 Proceedings and submitted to IEEE Xplore, provided they are presented on-site.

Challenge paper submissions should be made via this link.

Participants must submit the developed code and the challenge paper as final deliverables. Winners will be determined by the combined score from mission performance, measured by executing the submitted code, and the paper review. Winners must present their papers at the conference and will receive competition certificates according to their rankings.

- Paper Submission Deadline: 8 July 2025 (24:00 UTC), extended from 30 June 2025 (24:00 UTC)

- Final Code Submission Deadline: 8 July 2025 (24:00 UTC), extended from 30 June 2025 (24:00 UTC)

- Winners Announcement: 15 July 2025 (24:00 UTC)

IEEE MetaCom 2025


The International Conference on Metaverse Computing, Networking and Applications 2025 (IEEE MetaCom 2025) is the host conference of the MARC (Meta-Sejong AI Robotics Challenge) 2025 event.